If you want to make a cinephile cringe, “digital face replacement” is the phrase that pays. “Digital de-aging” and “deepfake” will do the trick, too. While theoretically just the latest addition to the filmmaker’s toolkit, the technology has proven to enable some of Hollywood’s ugliest and most cowardly instincts. In an industry already averse to risk and change, digital de-aging and the more dehumanizing practice of outright replacing an unknown actor’s face with a familiar one allow media corporations to lean more than ever on the cheap high of nostalgia.

Of course, any illusion — cinematic or otherwise — is only as good as the magicians creating it. If their intent is merely to dazzle you for a hot second, then it’s just a magic trick. With loftier goals and an artistic hand, a visual effect can be profoundly moving.

Improbably, this year’s best argument for the value of digital face replacement in cinema came from a big-budget Star Trek fan film. 765874: Unification is a 10-minute short produced by effects studio OTOY and The Roddenberry Archive, an online museum founded by Star Trek creator Gene Roddenberry’s son Rod. It follows Captain James T. Kirk after his death in 1994’s Star Trek: Generations, navigating an abstract afterlife and crossing barriers of time and reality to comfort his dying friend, an aged Spock in the image of the late Leonard Nimoy.

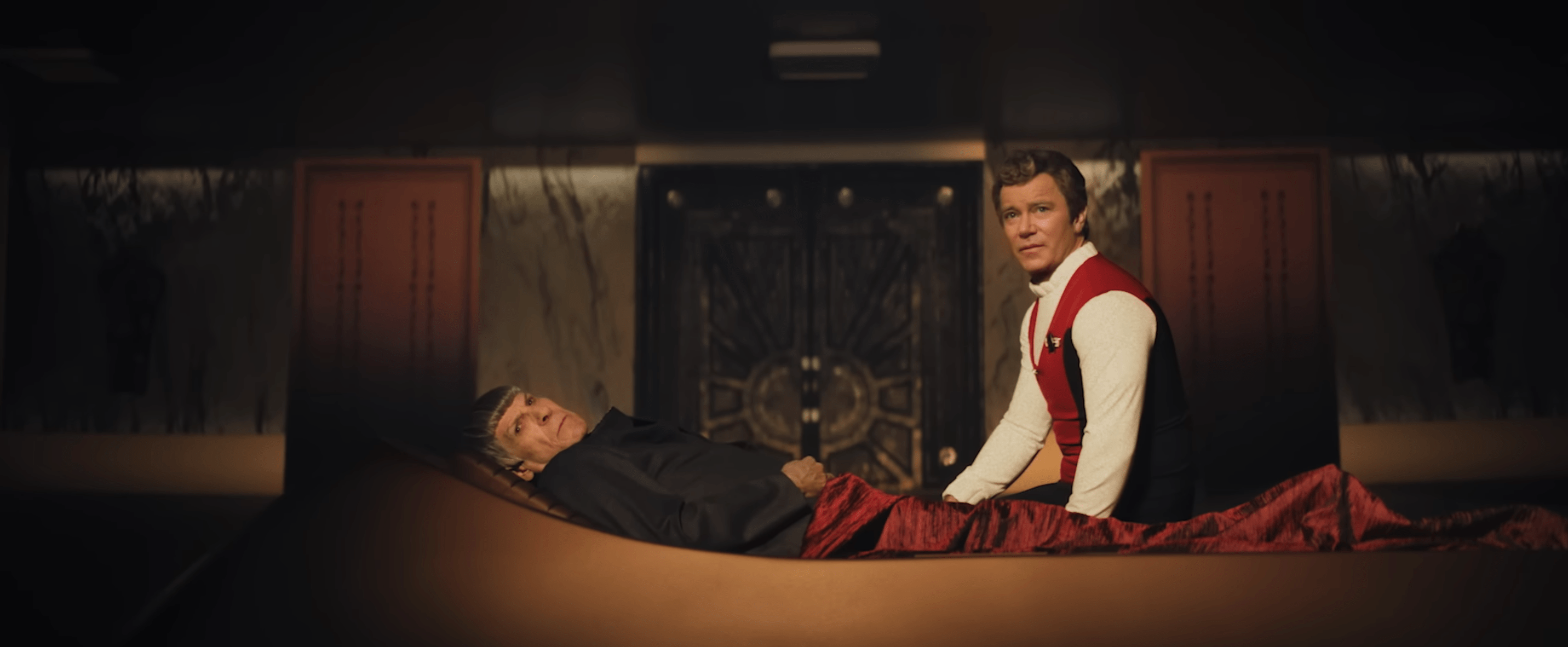

The role of James T. Kirk is portrayed by William Shatner — but also, it isn’t. It’s actually actor Sam Witwer, wearing a digital prosthetic of Shatner’s face circa 1994. This latest generation of digital mask renders in real time, allowing the actor to rehearse in front of a monitor and perfect his performance as he would with a physical makeup effect.

Witwer’s work absolutely pays off. On first viewing, practically any viewer would reasonably assume that the actor on screen is a de-aged William Shatner.

Without seeing it for yourself, you could be forgiven for dismissing Unification as easily as the late Harold Ramis’ cheap, ghostly cameo in Ghostbusters: Afterlife. The difference, however, is in the execution of this story as well as in its purpose. The climax of Ghostbusters: Afterlife sees a digitally resurrected Ramis effectively passing the Proton Pack to a new generation, offering a tacit endorsement of a commercial product that the actor never saw. It’s a mechanically engineered tearjerking moment amid a hollow exercise in nostalgia, a sweaty effort to invest a new generation in Ghostbusters — not the raunchy snobs-versus-slobs comedy, mind you, but the toy line it inspired.

By contrast, Unification is a noncommercial work about putting the past to rest, and saying goodbye to two beloved figures: not Kirk and Spock, but Shatner and Nimoy.

Kirk and Spock, after all, live on, recast twice already on film and television. But this film wouldn’t work if the roles were played by Chris Pine and Zachary Quinto, or Paul Wesley and Ethan Peck, because it’s not really about Kirk visiting Spock on his deathbed. It’s about the 93-year-old Shatner — who also produced the short along with Nimoy’s widow, Susan Bay Nimoy — facing his own death through the lens of his most famous character and finding comfort in the notion that he may be reuniting with the man he once called “brother.” It helps that this is a noncommercial work, but what really makes Unification outstanding is Sam Witwer’s performance. Director Carlos Baena composes something that is somehow both art film and tech demo, hiding the weaknesses of the VFX while trusting Witwer/Shatner’s face and Michael Giacchino’s original score to tell the story.

Like most new technologies, digital makeup hit the market well before the kinks were worked out. Mass audiences got their first obvious look at the process in 2006, when the eerily smoothed-out faces of Sirs Patrick Stewart and Ian McKellen stepped into frame in the prologue of X-Men: The Last Stand. In order to make the two actors, then in their 60s, appear 20 years younger, the production enlisted the VFX house Lola to apply a process that they’d previously employed to “perfect” the skin of pop stars in music videos. The results on the screen were infamously uncanny, but Lola co-founder Greg Strause nevertheless predicted that this work would cause a “fundamental shift” in cinema.

“Writers have stayed away from flashbacks because directors don’t like casting other people,” Strause told Computer Graphics World at the time. “This could break open a fresh wave of ideas that had been off-limits.”

On the one hand, Strause was correct in that digital de-aging enabled storytellers — particularly those working in genres with a higher threshold for suspended disbelief like science fiction or comedy — to expand the utility of certain actors in flashback.

This became one of Marvel Studios’ favorite moves, letting Baby Boomer actors like Michael Douglas or Kurt Russell play 30 or 40 years younger for a few scenes, or Samuel L. Jackson for an entire film. The practice also escaped the confines of genre cinema: Martin Scorsese adopted it for a few shots in 2006’s The Departed before going all in with 2019’s The Irishman, which used a new effects methodology developed by Pablo Helman and ILM. No longer the specialty of one effects house, digital de-aging has become an industry in itself, with different studios offering different methods on a variety of scales and budgets. It’s everywhere now, from Avatar to The Righteous Gemstones.

In theory, there’s nothing evil about a digital prosthetic. It’s simply another storytelling tool, like practical makeup. Like any visual effect, it works best when you don’t notice it. (If you’d never seen Willem Dafoe before Spider-Man: No Way Home, you’d have no idea he’d been de-aged 19 years; the same can’t be said for Alfred Molina.) However, digital de-aging and face replacement are used more often as features to be appreciated than as effects to be disguised. At the moment, it’s a gimmick, a generous sprinkle of movie magic that makes something impossible — like 58-year-old Nicolas Cage getting a sloppy kiss from 28-year-old Nicolas Cage — possible.

Digital face or head replacement can be used to unique and interesting effect, preserving the integrity of an actor’s performance and allowing for stories that, as Strause predicted, might not have worked otherwise. The family drama in Tron: Legacy between Jeff Bridges’ aged Kevin Flynn, his estranged biological son Sam (Garrett Hedlund), and his “perfect” digital clone Clu is uniquely compelling in a way that probably would not click if Bridges were not also playing Clu. The process digitally scanned Bridges’ performance and rendered a virtual younger Bridges over the on-set performance of John Reardon, who in turn repeated all of Bridges’ takes to complete the illusion.

What makes this sticky is that this may be the first time you’ve heard of John Reardon, a working actor in Canada who figures heavily in a big-budget Disney feature but whose face never appears, whose voice is never heard, and whose name is way down at the bottom of the acting credits. He’s listed as the “performance double” for Clu and Young Kevin Flynn. In this particular case, Reardon’s obscured role in the film is somewhat justified, as his performance mimicked Bridges’ takes as closely as possible and it’s Bridges who’s wearing a rig on his head and driving Clu’s CGI face. Stunt actors and stand-ins don’t share billing with the principals they’re doubling, and it could be argued that Reardon’s job on Tron: Legacy was not so different.

But as studios — particularly Disney — double down on making each of their intellectual properties a living, everlasting document with an unbroken continuity, the use of digital masks represents a deeply troubling future where the person who’s performing a role is never the star. This industry villain wears the face of one of Hollywood’s most beloved heroes, Luke Skywalker.

In 2020, when a young Luke made a surprise cameo appearance in the second season finale of The Mandalorian, one could easily imagine a media frenzy over Lucasfilm casting a new live-action Luke Skywalker for the first time. Instead, actor Max Lloyd-Jones was buried in the credits as “double for Jedi,” while Mark Hamill, whose face was superimposed onto his but who does not actually appear, received his own title card. When Luke reappeared in The Book of Boba Fett in early 2022, this time with a full speaking role, a different actor, Graham Hamilton, served as his “double.” In addition to replacing Hamilton’s face (a dead ringer for young Hamill), the production created Luke’s dialogue using machine learning to mimic Hamill’s voice circa 1982. Next time Luke appears in a live-action Star Wars work as a digital phantom, he will no doubt be played by another disposable actor whose career will barely benefit, while the Disney-owned intellectual property that is Luke Skywalker remains a household name.

Of course, we’ll also never know whether Max Lloyd-Jones or Graham Hamilton has the chops to succeed Mark Hamill as Luke Skywalker because, in both of Young Luke’s television appearances, he does as little as possible, since the digital mask looks less convincing the more “Luke” speaks or emotes. Neither actor got the chance to do anything with the character to demonstrate either his own spin or even the perfect mimicry that Star Wars obsessives would no doubt prefer. The irony here is that, as is the case with practical makeup effects or full-body performance capture, the only thing that can really sell digital de-aging or full face replacement is great acting.

2024 saw Robert Zemeckis, a filmmaker who is constantly pushing the limits of technology to indulge his bizarre storytelling whims, hinge an entire film on digital de-aging, casting Tom Hanks and Robin Wright to play high school sweethearts all the way through to old age in his new feature Here. Beyond the novelty of the gimmick, avoiding recasting characters at different ages helps to keep Here’s unconventional narrative legible as it bounces back and forth between decades and centuries. Here is a hokey and heavy-handed affair, but the digital effects never feel as if they’re a hindrance to the actors’ performances. Their digital masks, created using deepfakes from the hundreds of hours of footage available from their long careers, are among the best the big screen has seen so far. But the actors are also physically selling their characters’ different ages the way that stage and film actors have been doing for generations. It’s imperfect, but it’s sincere and informed by all the tiny decisions that actors make about their characters and their off-screen lives while preparing for a role.

It’s that same element that made Andy Serkis’ performance as Gollum in The Lord of the Rings a watershed moment in cinema, and that continues to make the rebooted Planet of the Apes franchise exciting even after his departure. This year’s Kingdom of the Planet of the Apes, like its Serkis-led predecessors, shines not for its incredibly rendered sentient ape effects but for the way those effects disappear into the characters they represent. Peter Macon may not get recognized on the street for his voice and mo-cap performance as the endearing orangutan philosopher Raka, but there’s no debating whose performance it is.

Contrast this with one of the year’s most widely criticized special effects: the late Ian Holm’s ill-advised cameo as the decapitated android Rook in Alien: Romulus. Director Fede Álvarez made an effort to avoid the digital uncanny valley by commissioning an animatronic Rook made from a cast of Ian Holm’s head (with the permission of Holm’s family), but a layer of VFX was added on top of it that actually compounded the problem.

It’s hard to say which was more distracting — the effect, or the mere presence of the Holmunculus itself. The narrative doesn’t require Rook to resemble an established character; it was simply an Easter egg turned rotten, an expensive effect that failed where an actor would have done just fine.

Like so many filmmakers before who’ve whiffed on ambitious special effects, Álvarez and company may simply have succumbed to the temptation to use a cool new filmmaking toy. This impulse, if indulged, ends up hurting not only their respective films but the reputation of the technology as a whole.

In an interview with TrekCulture about 765874: Unification, Sam Witwer was quick to push back against the notion that the short’s transformative digital makeup process would spread like wildfire — not despite his involvement in its development, but because of it. “It will grow so long as it’s done well. You’ll recall that when Jurassic Park came out, people were pretty high on CGI, because it was impeccably done. Then it got into the hands of people who didn’t do it as well, and ‘CGI’ was a bad word for a while. It’s all about the artists. In the case of OTOY, they trusted that an actor was an integral part of that team.”

There is a great deal of well-justified anxiety in the art world over the general public’s apparent indifference to whether a piece of “content” is created by people or by artificial intelligence. The ability to enter a prompt into a piece of software and have it generate infinite variations on something you already like has widespread appeal, but it’s also incredibly shallow. 765874: Unification is, superficially, the kind of story a Trekkie might try to generate via AI, a “fix-it fic” starring two actors who no longer exist as we remember them. But there’s nothing you can type into a machine that is ever going to result in a film like this.

For as much as Unification is a weird, lyrical jumble of deeply obscure Star Trek lore, it’s also a minor cinematic miracle. If something like this can exist and bring a tear to the eye of the most jaded, critical viewer, then the technology behind it doesn’t have to represent a creative doomsday. Employed with purpose and human emotion and performance behind it, it can create something unique and beautiful.

Source: https://www.polygon.com/opinion/498387/star-trek-unification-deepfake-de-aging-history-culture